N4-Fields: Neural Network Nearest Neighbor Fields for Image Transforms

Abstract

We propose a new architecture for difficult image processing operations, such as natural edge detection or thin object segmentation. The architecture is based on a simple combination of convolutional neural networks with the nearest neighbor search.

We focus on situations where the desired image transformation is too hard for a neural network to learn explicitly. We show that in such situations, applying nearest neighbor search on top of the network output improves the results considerably and compensates for underfitting during neural network training. The approach is validated on three challenging benchmarks, where the performance of the proposed architecture matches or exceeds the state of the art.
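To illustrate the idea of running a nearest neighbor search on top of a network output, here is a minimal sketch. It is not the authors' implementation. The abstract does not specify how the search is set up, so the sketch assumes a dictionary of output codes computed for training patches, each paired with a known annotation patch, and a brute-force Euclidean search. The names `nn_transfer`, `dict_codes`, and `dict_annotations` are hypothetical.

```python
import numpy as np

def nn_transfer(query_codes, dict_codes, dict_annotations):
    """Return, for each query code, the annotation of its nearest dictionary code.

    Assumption: codes are network outputs (one row per patch), and each
    dictionary code has a known annotation patch attached to it.
    """
    # Squared Euclidean distance between every query and every dictionary entry.
    diffs = query_codes[:, None, :] - dict_codes[None, :, :]
    dist2 = (diffs ** 2).sum(axis=2)
    # Index of the closest dictionary entry for each query.
    nearest = dist2.argmin(axis=1)
    return dict_annotations[nearest]

# Toy example: three dictionary entries with 2-D codes and 2x2 annotation patches.
dict_codes = np.array([[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]])
dict_annotations = np.array([
    [[0, 0], [0, 0]],
    [[1, 1], [1, 1]],
    [[0, 1], [0, 1]],
])
# Two query codes, as if produced by the network on test patches.
query_codes = np.array([[0.9, 1.1], [0.1, -0.1]])
result = nn_transfer(query_codes, dict_codes, dict_annotations)
```

In this toy setup the first query picks up the all-ones annotation and the second the all-zeros one. A brute-force search is used only for clarity; a large dictionary would normally call for an indexed or approximate search.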

Results

Here you can find extended results for the three datasets used in the paper: BSDS500, NYU RGBD, and DRIVE.

You can switch between the datasets by clicking the labels at the top of the webpage. Within each dataset page, you can click on any row to zoom in on the results. You can also sort the images by the different performance measures. For all three datasets we provide the current state-of-the-art results of Dollar et al. [ICCV2013] for reference (based on the authors' code).

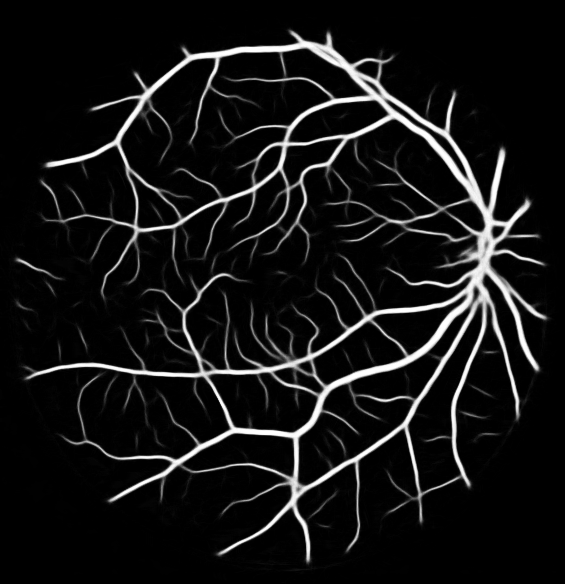

For BSDS500, we show the results for every image in the test set. For NYU RGBD, we sorted the test results by the difference between the F-measure of our method and that of the method of Dollar et al., and then sampled 200 results with uniform spacing. For DRIVE, we provide the results of our method for the entire test set.
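The selection procedure described for NYU RGBD can be sketched as follows. This is an illustration under assumptions, not the script used to build the page: the function name `sample_by_f_difference` is hypothetical, the F-measures are made-up toy values, and rounding evenly spaced positions to the nearest index is one possible reading of "uniform spacing".

```python
import numpy as np

def sample_by_f_difference(f_ours, f_baseline, n_samples=200):
    """Sort images by F-measure difference, then pick evenly spaced entries."""
    diff = np.asarray(f_ours) - np.asarray(f_baseline)
    # Image indices ordered from smallest to largest difference.
    order = np.argsort(diff)
    # Never request more samples than there are images.
    n = min(n_samples, len(order))
    # Evenly spaced positions across the sorted list, rounded to valid indices.
    positions = np.round(np.linspace(0, len(order) - 1, n)).astype(int)
    return order[positions]

# Toy example with five images and three samples.
f_ours = np.array([0.9, 0.5, 0.7, 0.6, 0.8])
f_baseline = np.full(5, 0.5)
picked = sample_by_f_difference(f_ours, f_baseline, n_samples=3)

# Larger synthetic example using the default of 200 samples.
rng = np.random.default_rng(0)
big = sample_by_f_difference(rng.random(1000), rng.random(1000))
```

Sampling from the sorted list in this way keeps images from the whole range of outcomes, from those where the baseline does better to those where the proposed method does better.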