Speeding-up CNNs

We propose a new approach for speeding up layers within convolutional networks.

Method description:

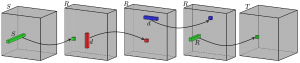

- decompose the 4D convolution kernel tensor into four 2D factor matrices (via package), and use them to replace the full convolution with a chain of four smaller convolutions

- fine-tune the entire CNN on training data
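The replacement step can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function names (`cp_kernel`, `full_conv`, `cp_conv`), the tensor layouts, and the random factor matrices standing in for the output of a decomposition package are all assumptions made for illustration. It checks that a kernel of the form K[t][s][i][j] = Σ_r kt[t][r]·ks[s][r]·ky[i][r]·kx[j][r] gives the same output as a chain of four smaller convolutions.

```python
import random

def rand_matrix(rows, cols, rng):
    """Random rows x cols matrix (stand-in for a factor matrix from a decomposition)."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

def cp_kernel(kx, ky, ks, kt):
    """Rebuild the full kernel K[t][s][i][j] from four factor matrices with R columns."""
    d, S, T, R = len(kx), len(ks), len(kt), len(kx[0])
    return [[[[sum(kt[t][r] * ks[s][r] * ky[i][r] * kx[j][r] for r in range(R))
               for j in range(d)] for i in range(d)]
             for s in range(S)] for t in range(T)]

def full_conv(u, k):
    """Full convolution (stride 1, no padding) of input u[s][y][x] with k[t][s][i][j]."""
    S, H, W = len(u), len(u[0]), len(u[0][0])
    T, d = len(k), len(k[0][0])
    return [[[sum(k[t][s][i][j] * u[s][y + i][x + j]
                  for s in range(S) for i in range(d) for j in range(d))
              for x in range(W - d + 1)] for y in range(H - d + 1)]
            for t in range(T)]

def cp_conv(u, kx, ky, ks, kt):
    """The same mapping computed as a chain of four smaller convolutions."""
    S, H, W = len(u), len(u[0]), len(u[0][0])
    d, R, T = len(kx), len(kx[0]), len(kt)
    # 1) 1x1 convolution: S input channels -> R channels
    a = [[[sum(ks[s][r] * u[s][y][x] for s in range(S))
           for x in range(W)] for y in range(H)] for r in range(R)]
    # 2) 1xd (horizontal) convolution, applied independently in each channel
    b = [[[sum(kx[j][r] * a[r][y][x + j] for j in range(d))
           for x in range(W - d + 1)] for y in range(H)] for r in range(R)]
    # 3) dx1 (vertical) convolution, applied independently in each channel
    c = [[[sum(ky[i][r] * b[r][y + i][x] for i in range(d))
           for x in range(W - d + 1)] for y in range(H - d + 1)] for r in range(R)]
    # 4) 1x1 convolution: R channels -> T output channels
    return [[[sum(kt[t][r] * c[r][y][x] for r in range(R))
              for x in range(W - d + 1)] for y in range(H - d + 1)]
            for t in range(T)]

# Hypothetical toy sizes, chosen only to keep the check fast.
rng = random.Random(0)
d, S, T, R, H, W = 3, 2, 3, 4, 5, 5
kx, ky = rand_matrix(d, R, rng), rand_matrix(d, R, rng)
ks, kt = rand_matrix(S, R, rng), rand_matrix(T, R, rng)
u = [rand_matrix(H, W, rng) for _ in range(S)]

v_full = full_conv(u, cp_kernel(kx, ky, ks, kt))
v_chain = cp_conv(u, kx, ky, ks, kt)
diff = max(abs(p - q)
           for fa, fb in zip(v_full, v_chain)
           for ra, rb in zip(fa, fb)
           for p, q in zip(ra, rb))
print(diff)  # largest absolute difference between the two outputs
```

In this toy check the kernel is exactly of rank R, so the two outputs agree up to floating-point rounding. A trained kernel is in general only approximated by a rank-R decomposition, which is presumably why the fine-tuning step follows.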

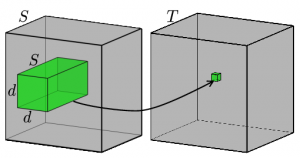

Convolutional layer before application of our method:

In our four-component decomposition, based on CP-decomposition, the first and last convolutions are 1×1. The two middle convolutions are 1×d and d×1, applied independently in each channel. R is the number of components in the decomposition and, at the same time, the number of channels in the intermediate convolutions. This parameter provides a tradeoff between the speed and the accuracy of the method. No custom layer implementation is needed, since this structure can be built from layers already implemented in Caffe.
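To make the role of R concrete, here is a rough operation count written as a small Python sketch. The symbols S (input channels) and T (output channels), the function names, and the example sizes are assumptions introduced for illustration. The count covers only multiply-adds per output position at stride 1 and ignores boundary effects and memory access, so measured CPU speedups will differ.

```python
def full_conv_macs(d, s, t):
    """Multiply-adds per output position for a full d x d convolution, S -> T channels."""
    return d * d * s * t

def cp_conv_macs(d, s, t, r):
    """Multiply-adds per position for the four-layer chain with R components."""
    first_1x1 = r * s      # 1x1 convolution, S -> R channels
    horizontal = r * d     # 1xd convolution, independently in each of R channels
    vertical = r * d       # dx1 convolution, independently in each of R channels
    last_1x1 = r * t       # 1x1 convolution, R -> T channels
    return first_1x1 + horizontal + vertical + last_1x1

def theoretical_speedup(d, s, t, r):
    """Ratio of the two counts; an idealised figure, not a measured speedup."""
    return full_conv_macs(d, s, t) / cp_conv_macs(d, s, t, r)

# Hypothetical layer: 3x3 kernel, 64 input and 64 output channels.
for r in (8, 16, 32, 64):
    print(r, round(theoretical_speedup(3, 64, 64, r), 2))
```

The chain costs R(S + 2d + T) multiply-adds per position against d²ST for the full convolution, so a smaller R gives a cheaper layer but a coarser approximation of the original kernel.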

We evaluate this approach on two CNNs and show that it is competitive with previous approaches, achieving higher CPU speedups with smaller accuracy drops for the smaller of the two networks. For the 36-class character classification CNN, our approach obtains an 8.5x CPU speedup of the whole network with only a minor accuracy drop (1%, from 91% to 90%). For the standard ImageNet architecture (AlexNet), the approach speeds up the second convolutional layer by a factor of 4 at the cost of a 1% increase in the overall top-5 classification error.